My first job out of school was with Digital Equipment Corporation, in Palo Alto, California. I worked there from 1987 to 1992, during the heyday (last hurrah?) of UNIX workstations and the X Window System.

Being workaholic young engineers, some of my colleagues and I spent probably too much time fiddling around with interactive X11 programs: collecting them, modifying them, writing some of our own, etc. I covered the catclock version of xclock in my first post to this blog.

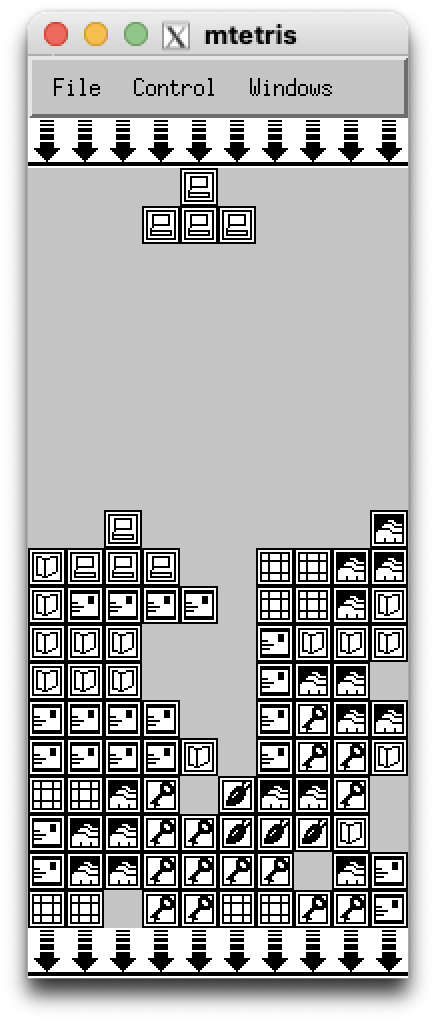

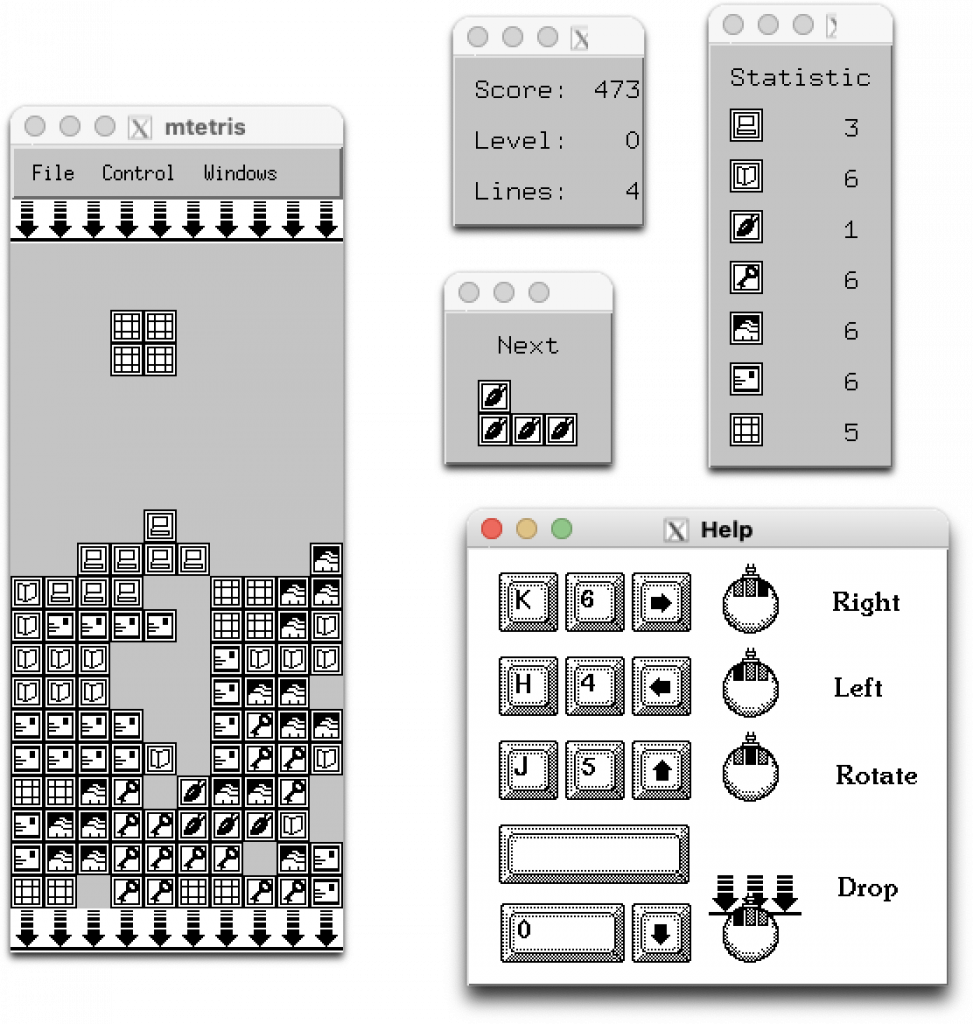

At some point I came across dwtetris, an X11/Motif implementation of the famous (infamous?) Tetris® game. I believe it was written for DECwindows by a DEC engineer in Japan, but now, nearly 40 years later, I don’t recall any details. I’ve spent some time combing the web, but can’t find any trace of the application. If anyone with better search skills can track down the origin of this program, that would be greatly appreciated. In any case, I made some minimal modifications so that it would build and run under ULTRIX, DEC’s Unix OS, and renamed it mtetris. As with the catclock program, I’ve managed to keep it compiling and running, and even have it working on macOS via XQuartz. It’s available in this GitHub repository.

The implementation is surprisingly full-featured: it includes a number of optionally displayed windows for the score, piece statistics, next piece, and UI help.

For computer history nerds: that round, three-button mouse was actually used with DECstation computers:

And yes, it was rather awful to use, as the top-mounted cord’s stiffness could cause the mouse to rotate a bit whenever you took your hand off, and your next mouse operation would not go in the direction you intended. But at least it had three buttons, making it convenient for interacting with 3D applications.

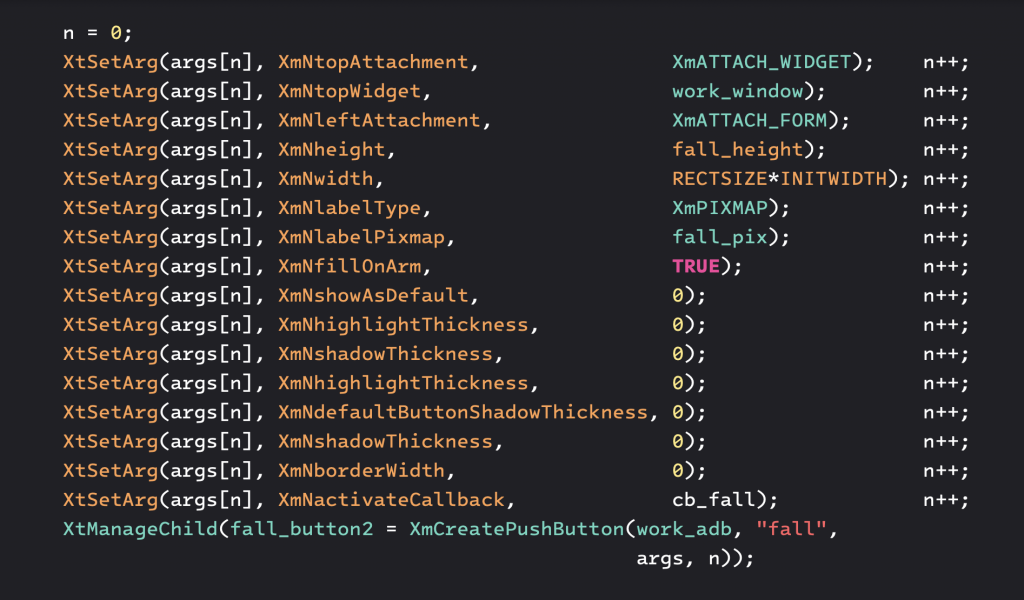

X11/Motif programs were written without the benefit of GUI builders like Xcode’s Interface Builder, so all the UI component construction and layout was done in code. The X11/Motif APIs required quite a bit of typing, so the code base for even small applications like this consisted largely of UI creation code. For example, here’s the implementation of a push button:
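To give a feel for the verbosity, here is a generic sketch (not code taken from mtetris) of what creating a single Motif push button and wiring up its callback typically involves. The widget name `"quit"`, the application class `"Demo"`, the callback `quit_cb`, and the sizes are all made up for illustration.

```c
/* Minimal Motif push-button sketch. Illustrative only; not from mtetris. */
#include <stdlib.h>
#include <Xm/Xm.h>
#include <Xm/PushB.h>

/* Hypothetical callback: exit when the button is activated. */
static void quit_cb(Widget w, XtPointer client_data, XtPointer call_data)
{
    (void)w; (void)client_data; (void)call_data;
    exit(0);
}

int main(int argc, char *argv[])
{
    XtAppContext app;
    Widget top, button;
    XmString label;
    Arg args[3];
    Cardinal n = 0;

    /* Connect to the X server and create the top-level shell. */
    top = XtVaAppInitialize(&app, "Demo", NULL, 0, &argc, argv, NULL, NULL);

    /* Motif labels are compound strings, not plain C strings. */
    label = XmStringCreateLocalized("Quit");

    /* Build the resource list one entry at a time. */
    XtSetArg(args[n], XmNlabelString, label); n++;
    XtSetArg(args[n], XmNwidth, 80); n++;
    XtSetArg(args[n], XmNheight, 30); n++;

    button = XmCreatePushButton(top, "quit", args, n);
    XmStringFree(label);   /* the widget keeps its own copy */

    /* Hook up the click handler, then make the button visible. */
    XtAddCallback(button, XmNactivateCallback, quit_cb, NULL);
    XtManageChild(button);

    XtRealizeWidget(top);
    XtAppMainLoop(app);
    return 0;
}
```

With the Motif development headers installed, something along the lines of `cc button.c -lXm -lXt -lX11` should build it, though include and library paths vary by system. Multiply those dozen or so lines by every button, label, and menu entry in an application and it becomes clear where most of the code went.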

In any case, interested parties are welcome to peruse the git repository and see a dandy example of 1980s-90s state-of-the-art programming. Enjoy!